CASE STUDY: Extracting data from ADL's "Antisemitic Incidents and Anti-Israel Rallies" map

On Saturday, I published an article for The Intercept about how the Anti-Defamation League has included dozens of Gaza ceasefire protests that were organized by progressive Jews in its map of "antisemitic incidents and anti-Israel rallies," alongside genuine antisemitic vandalism, harassment, and assault by neo-Nazis.

ADL doesn't publish its raw data, but I managed to get it anyway while doing this reporting. In the spirit of sharing data journalism skills, I will show you exactly how I extracted it from the map.

Several thousand Jews and their allies occupied Grand Central Station on October 27 demanding a ceasefire in Gaza -- the largest sit-in in NYC since the Iraq War protests. This is one of the "anti-Israel rallies" that ADL put on its map alongside neo-Nazi hate. Credit: @jewishvoiceforpeace on Instagram

Several thousand Jews and their allies occupied Grand Central Station on October 27 demanding a ceasefire in Gaza -- the largest sit-in in NYC since the Iraq War protests. This is one of the "anti-Israel rallies" that ADL put on its map alongside neo-Nazi hate. Credit: @jewishvoiceforpeace on Instagram

In my article I wrote:

While ADL doesn’t distribute its raw data in a usable format, when you load the map in a web browser, behind the scenes your browser downloads a copy of it. By monitoring what my browser downloaded while loading the map, I was able to extract a copy of the data and save it as a spreadsheet. The raw data is full of duplicates. After de-duplicating it, I ended up with a spreadsheet with 1,163 “antisemitic incidents and anti-Israel rallies.”

In this post I explain how I found the data in the HTML of ADL's map in JSON format, how I converted it into a spreadsheet, and how I de-duplicated this spreadsheet, including with the help of the AI systems GitHub Copilot and ChatGPT's GPT-4. If you follow along, you'll end up with a usable copy of ADL's dataset yourself.

While I'm at it, I'll point to the different chapters in my book Hacks, Leaks, and Revelations that provide further reading for each topic. (The official release date of my book is in early January 2024, but if you're in the U.S. and order it directly from the publisher, they should start shipping it at the end of this month.)

You can find ADL's map here: https://www.adl.org/stand-with-israel

Downloading ADL's map data

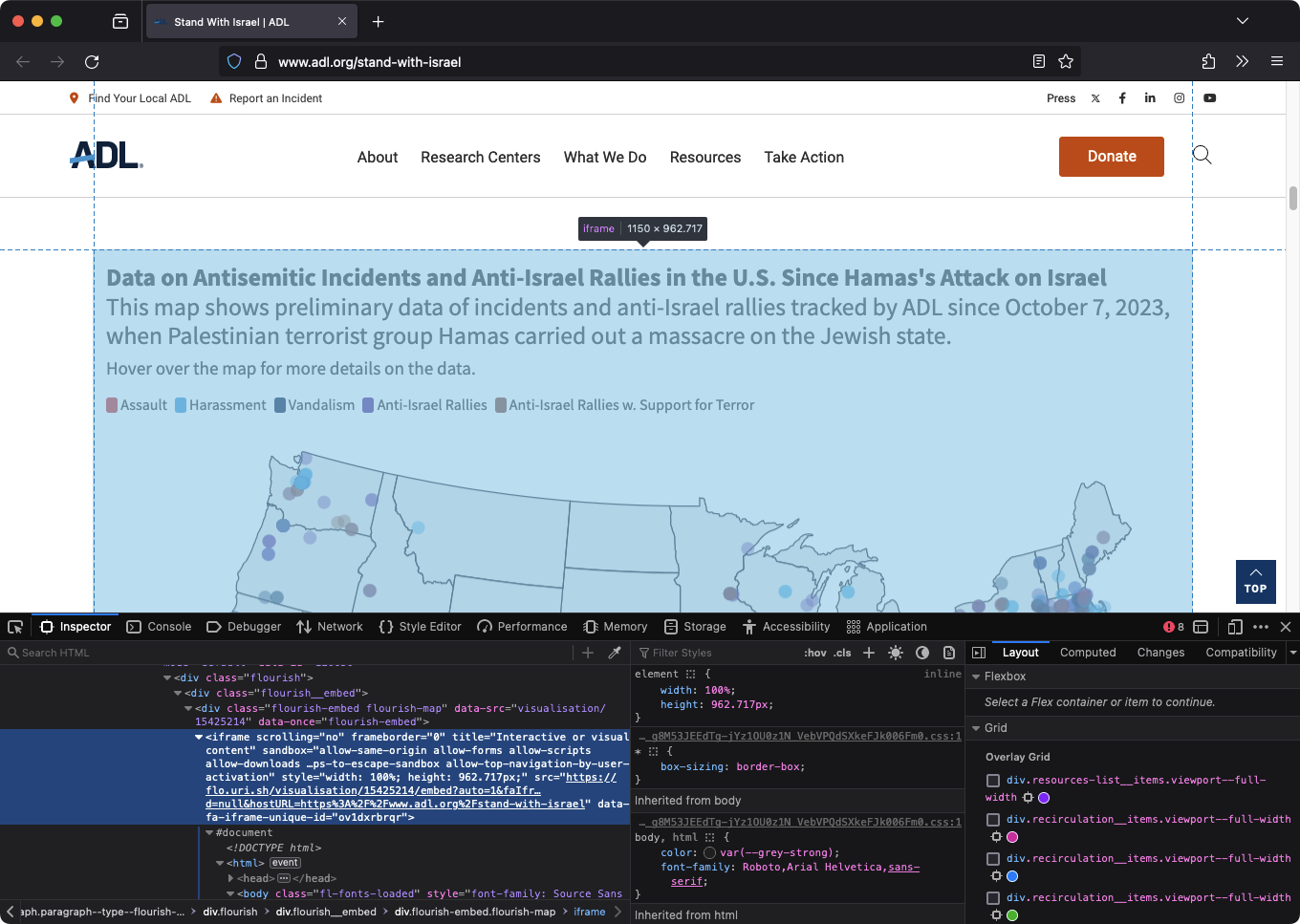

If you load ADL's Stand With Israel page and scroll down, you'll find the map full of data points. By using my browser's web developer tools, it was quickly clear that this map itself is an iframe:

The iframe is loaded from this URL: https://flo.uri.sh/visualisation/15425214/embed?auto=1&faIframeUniqueId=ov1dxrbrqr&jsid=null&hostURL=https%3A%2F%2Fwww.adl.org%2Fstand-with-israel

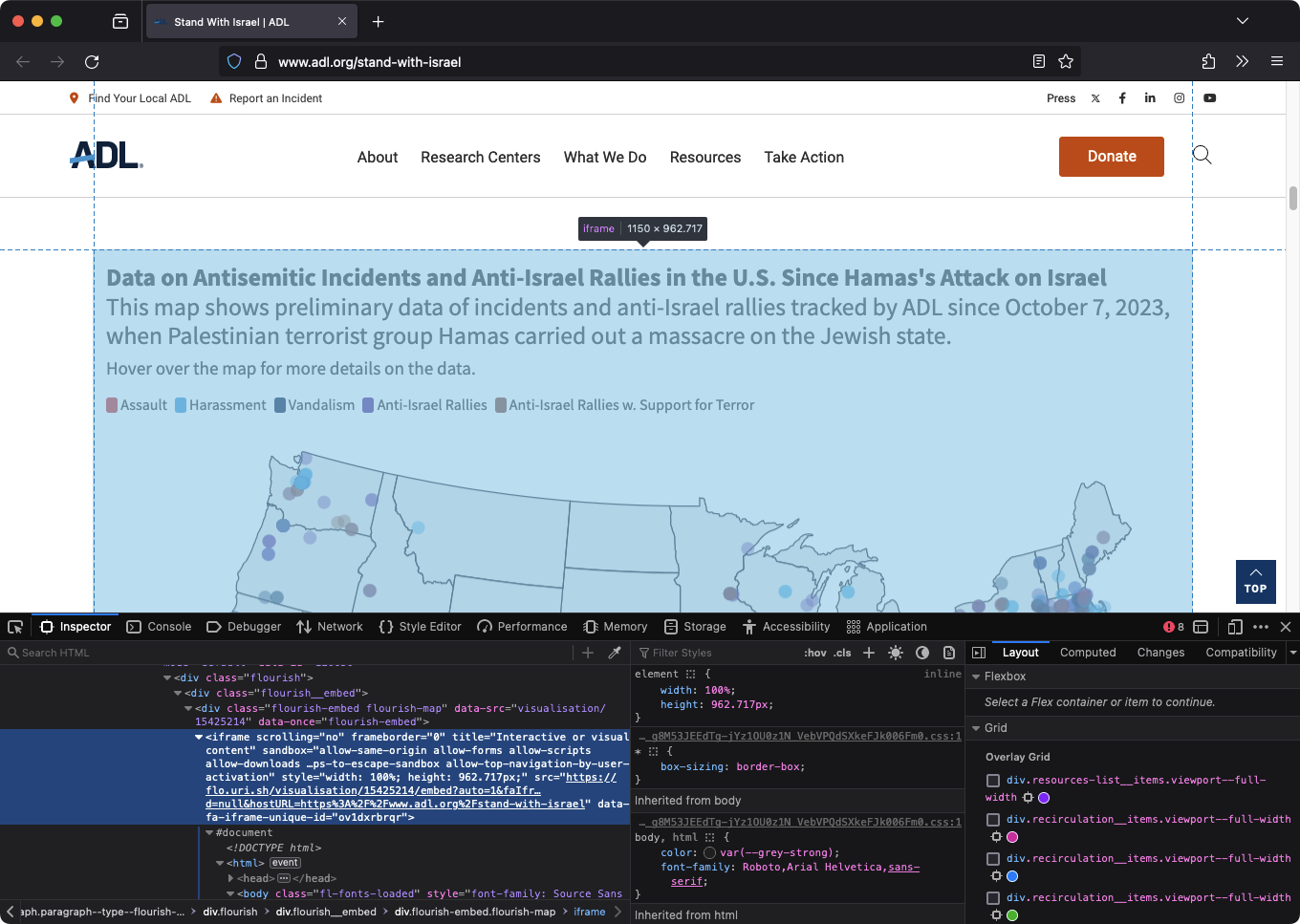

If you load that URL directly, you'll see just the map, with all of ADL's data visualized on it:

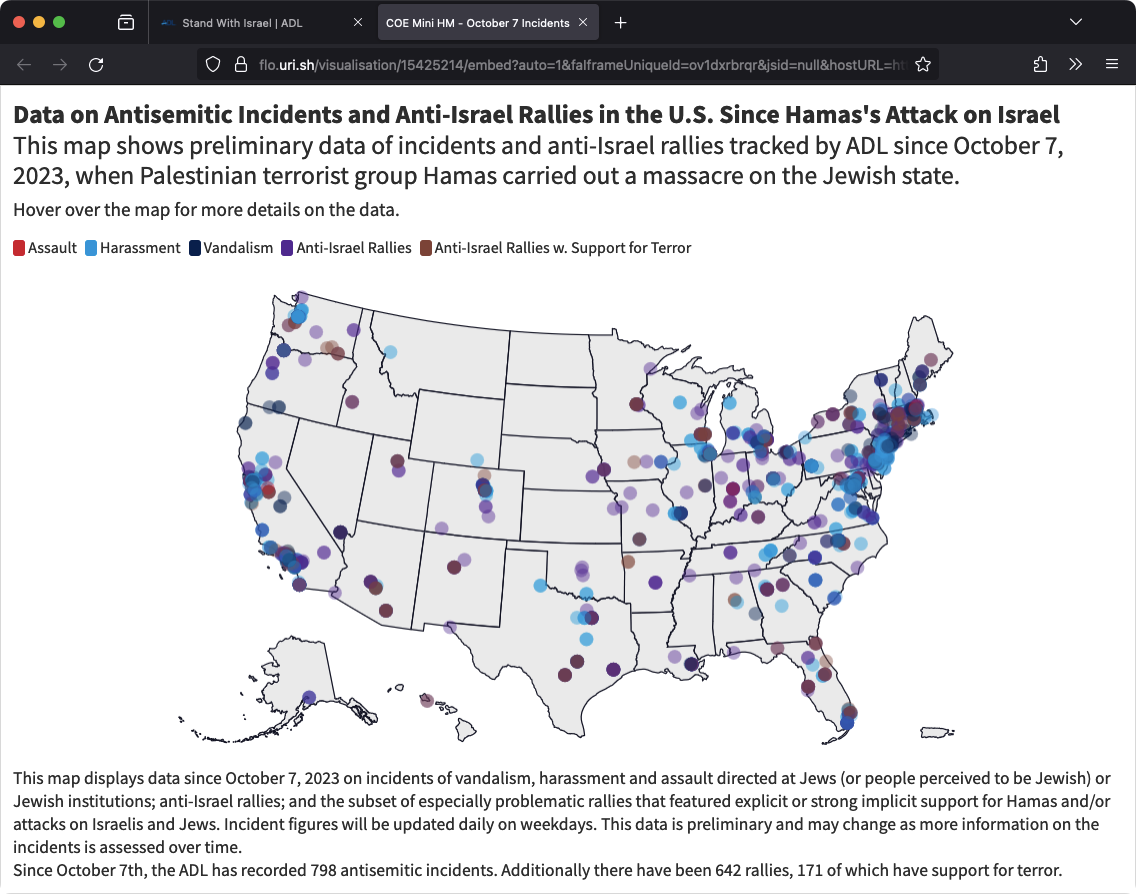

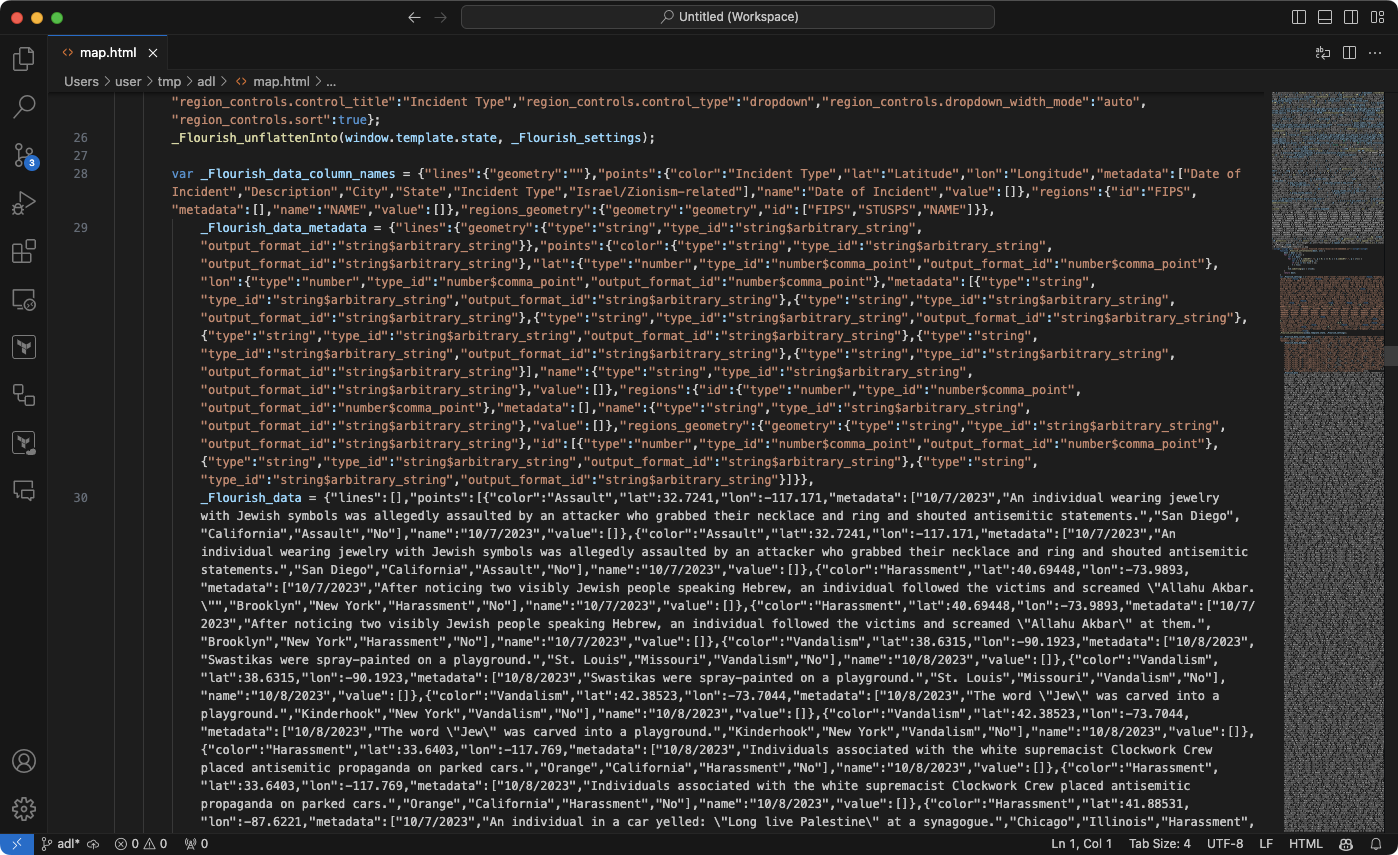

If you view the source, you'll see that the HTML includes JavaScript which has a copy of the raw data in it, in the variable _Flourish_data:

This HTML page scrolls horizontally -- there is a lot of data here, all stored in that one variable. Let's take a look at it in a text editor where I can turn on word wrapping. First, I download a copy of the HTML by using wget in a terminal:

wget -O map.html "https://flo.uri.sh/visualisation/15425214/embed?auto=1&faIframeUniqueId=ov1dxrbrqr&jsid=null&hostURL=https%3A%2F%2Fwww.adl.org%2Fstand-with-israel"

This command downloads that URL and saves it in the file map.html. Then, I can open it in Visual Studio Code by running:

code map.html

Chapters 3 and 4 of my book introduce you how to use the terminal, and the wget command, to do work just like this. It's designed for beginners who have never touched the command line before, and might even be scared of it. It also shows you how to start working with VS Code as a text editor.

After downloading it, I can tell that map.html is about 1MB, and most of this disk space is used up by giant JSON objects defined inside the JavaScript. Here's a screenshot of viewing map.html in VS Code:

Viewing the JSON data

You can already tell that the raw data is here, but it's incredibly difficult to read. To make it easier, I'll copy line 30 (which is the line that sets the variable _Flourish_data to a huge JSON object) to my clipboard and paste it into its own file. This line starts with:

_Flourish_data = {"lines":[],"points":[{"color":"Assault","lat":32.7241,"lon":-117.171,"metadata":["10/7/2023","An individual wearing jewelry with Jewish symbols was allegedly assaulted by an attacker who grabbed their necklace and ring and shouted antisemitic statements."

I deleted the _Flourish_data = at the beginning, so that this file contains nothing but JSON data, and now starts like this:

{"lines":[],"points":[{"color":"Assault","lat":32.7241,"lon":-117.171,"metadata":["10/7/2023","An individual wearing jewelry with Jewish symbols was allegedly assaulted by an attacker who grabbed their necklace and ring and shouted antisemitic statements."

I then saved this file as data.json. In the bottom-right of the VS Code window it says "JSON," which means that my text editor thinks this is JSON file. With the data.json tab selected, I press Command-Shift-P, and type "format document", press enter, and save. This formats the JSON document with spacing that makes it reasonable for humans to read.

After formatting, it takes up 23,267 lines (at least it does for me right now as I'm writing this -- ADL is continually adding data to their map, so there will likely be more lines for you if you follow along). Here's how it starts:

{ "lines": [], "points": [ { "color": "Assault", "lat": 32.7241, "lon": -117.171, "metadata": [ "10/7/2023", "An individual wearing jewelry with Jewish symbols was allegedly assaulted by an attacker who grabbed their necklace and ring and shouted antisemitic statements.", "San Diego", "California", "Assault", "No" ], "name": "10/7/2023", "value": [] },

This is what formatted JSON data looks like.

Chapter 11 of my book teaching you how to work with data in the JSON file format, using the Parler dataset of over a million videos uploaded to the far-right social networking site Parler, including thousands of videos of the January 6, 2021, insurrection at the US Capitol.

JSON is everywhere, so if you want to do this type of work, it's important to understand how it works and how to work with it.

This JSON objects has a lines key that's an empty array, followed by a points key that is an array of points. Each point looks like it's an incident on ADL's map. It includes a color (which is a category, like "Assault"), lat and lon GPS coordinates, metadata about the incident, a name (which is just the date, like "10/7/2023"), and a value that seems to be an empty array.

The metadata is an array with 6 strings in it. It's pretty clear what most of them mean, but not so much the last item. Here's the metadata from above:

"metadata": [ "10/7/2023", "An individual wearing jewelry with Jewish symbols was allegedly assaulted by an attacker who grabbed their necklace and ring and shouted antisemitic statements.", "San Diego", "California", "Assault", "No" ],

It looks like this metadata is ordered like this:

- date

- description

- city

- state

- category

- some boolean value of "Yes" or "No"

I looked back at the map.html code and saw that there's also a JavaScript variable defined called _Flourish_data_column_names. Doing the same thing -- copying and pasting it into its own file in VS Code, and then formatting it, here's what that variable contains:

{ "lines": { "geometry": "" }, "points": { "color": "Incident Type", "lat": "Latitude", "lon": "Longitude", "metadata": [ "Date of Incident", "Description", "City", "State", "Incident Type", "Israel/Zionism-related" ], "name": "Date of Incident", "value": [] }, "regions": { "id": "FIPS", "metadata": [], "name": "NAME", "value": [] }, "regions_geometry": { "geometry": "geometry", "id": [ "FIPS", "STUSPS", "NAME" ] } }

And there's the key that describes this data. The metadata array is in this order:

- Date of Incident

- Description

- City

- State

- Incident Type

- Israel/Zionism-related

Converting the JSON to a CSV spreadsheet

I have the raw data that's used to draw points on ADL's map, but it would be considerably easier to read and work with if it were in a spreadsheet format rather than a JSON array of points. So, I wrote a simple Python script to convert this into a CSV spreadsheet.

Chapters 7 and 8 of my book include a basic introduction to Python programming -- intended for total newbies who have never written code before -- in order to do simple data analysis tasks just like this. And Chapter 9 of my book goes into detail on working with spreadsheets in CSV (comma-separated value) format -- making use of the hundreds of CSV files in the BlueLeaks dataset, hacked from police departments in the middle of the 2020 Black Rights Matter uprising.

CSVs are the simplest type of spreadsheet, and like JSON data, CSV data is everywhere.

Here's the first version of the Python script that I wrote, called extract-incidents.py:

import json import csv def main(): # Load html from file with open("map.html") as f: html = f.read() # Extract data from HTML for line in html.split("\n"): if "_Flourish_data = " in line: data_json = line.replace("_Flourish_data = ", "") data_json = data_json.strip()[:-1] # strip trailing comma data = json.loads(data_json) # Save data points as CSV rows = [] headers = [ "Date of Incident", "Description", "City", "State", "Incident Type", ] with open("adl-data.csv", "w") as f: writer = csv.DictWriter(f, fieldnames=headers) writer.writeheader() for point in data["points"]: row = { "Date of Incident": point["metadata"][0], "Description": point["metadata"][1], "City": point["metadata"][2], "State": point["metadata"][3], "Incident Type": point["metadata"][4], } rows.append(row) writer.writerow(row) print(f"Number of rows: {len(rows)}") if __name__ == "__main__": main()

My script works like this:

- It opens

map.htmland loads its contents into a variable calledhtml. - It searches each line in the

htmlvariable for the string_Flourish_data =, and if it finds it it does a bit of string slicing to just pull out the JSON string containing of the data, saving it in the variabledata_json. - It then loads the raw data into the variable

databy setting it equal tojson.loads(data_json)-- so now,datais a Python dictionary containing all of the raw data. - It uses

csv.DictWriter()to create a new CSV file calledadl-data.csvwith the column headers set to the same fields in the metadata (except for "Israel/Zionism-related", I decided to leave that one out of my spreadsheet). - It loops through all of the points in the map (

data["points"]), and for each point writes a row to the new CSV. - It displays the number of rows in the final CSV that it created.

When I just ran it, and I got 1,502 rows:

% python3 extract-incidents.py

Number of rows: 1502

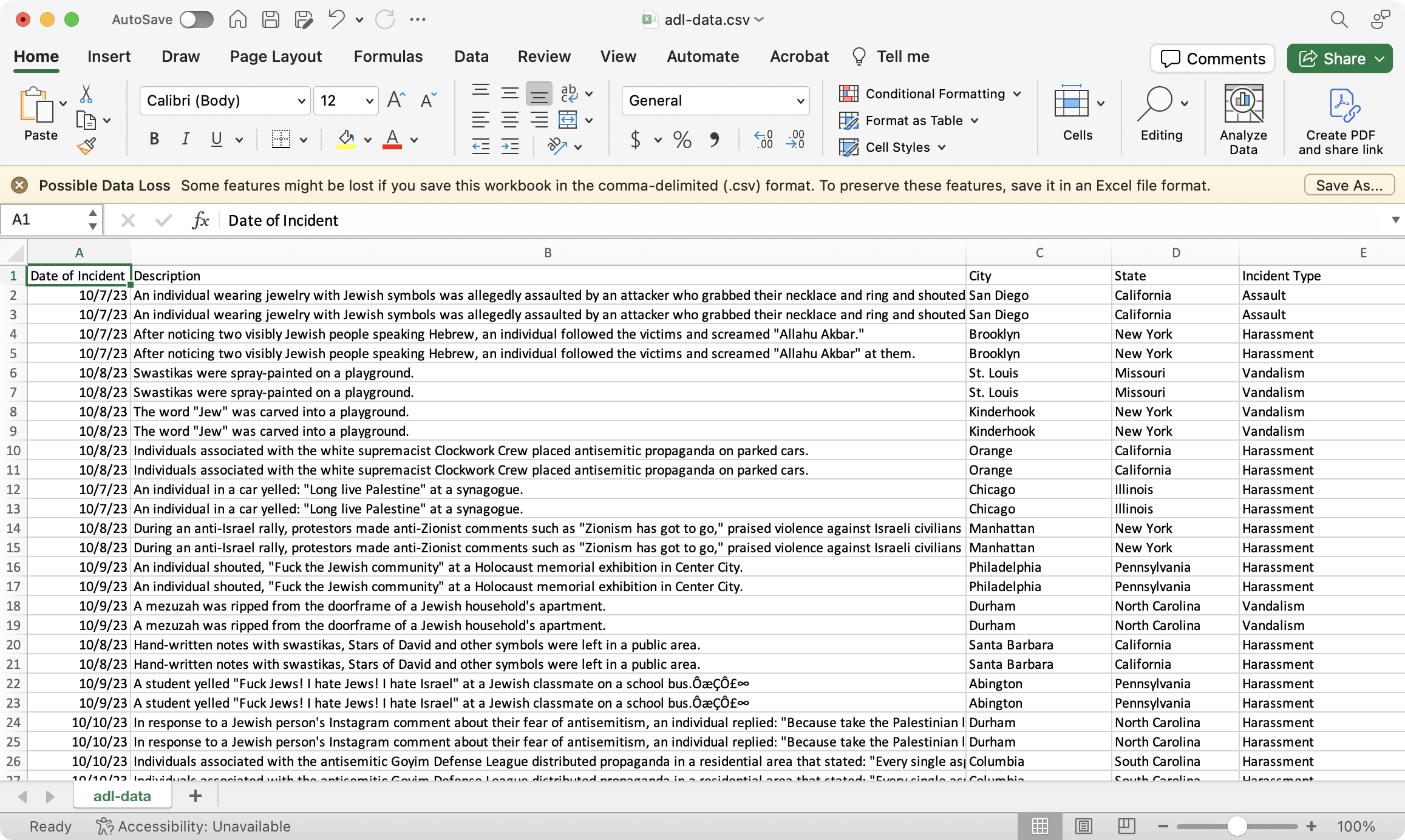

Now I have a file called adl-data.csv. Here's what it looks like when I open it in Excel:

The first thing that's obvious is that this data is full of duplicates. But I'll deal with that in a minute.

CSV files are the simplest spreadsheet format, and that don't contain any of the advanced features of spreadsheet software like Excel, LibreOffice Calc, or Google Sheets. So this is how I work with the spreadsheet next:

- I save this file in Excel format (

.xlsx). - I click View at the top, and click Freeze Top Row. This freezes the top row because it contains column headers, allowing me to scroll down and still see the headers.

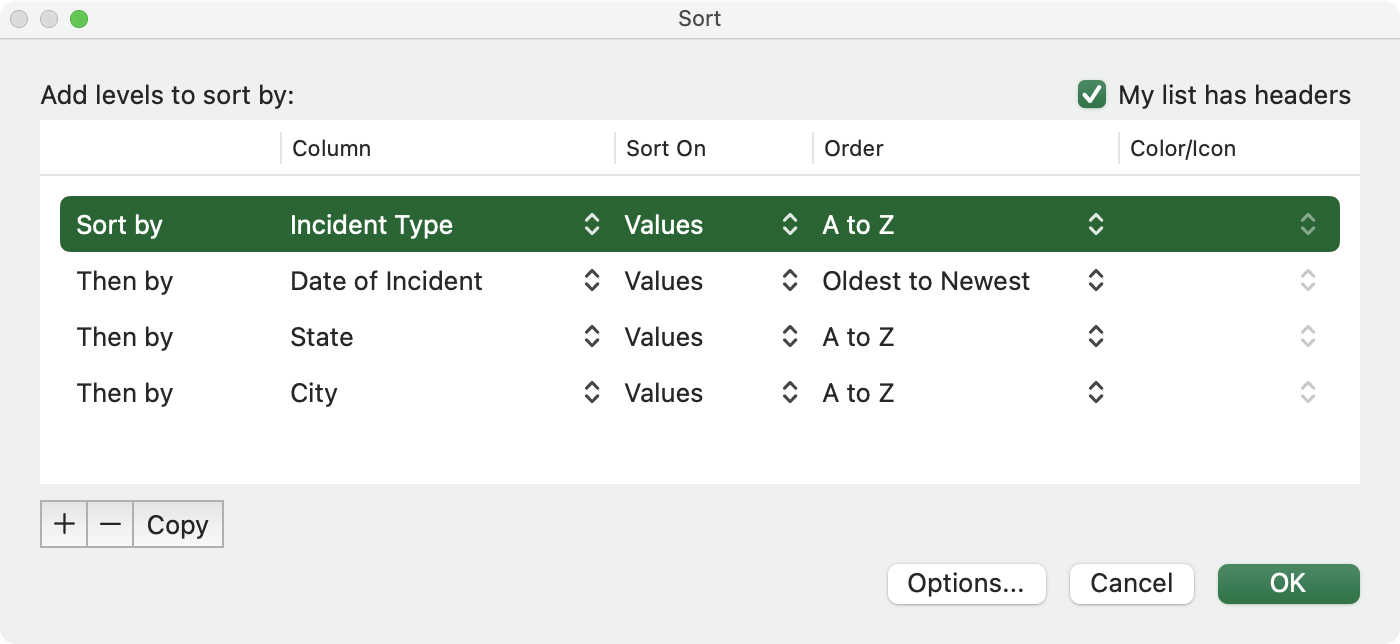

- I click Data at the top, and then I click Sort. This allows me to sort the spreadsheet first by one column, then by another, and so on. While doing this reporting, I frequently re-sorted the spreadsheet in order to easily find specific rows. In this case, I'm sorting by Incident Type, then Date of Incident, then State, then City.

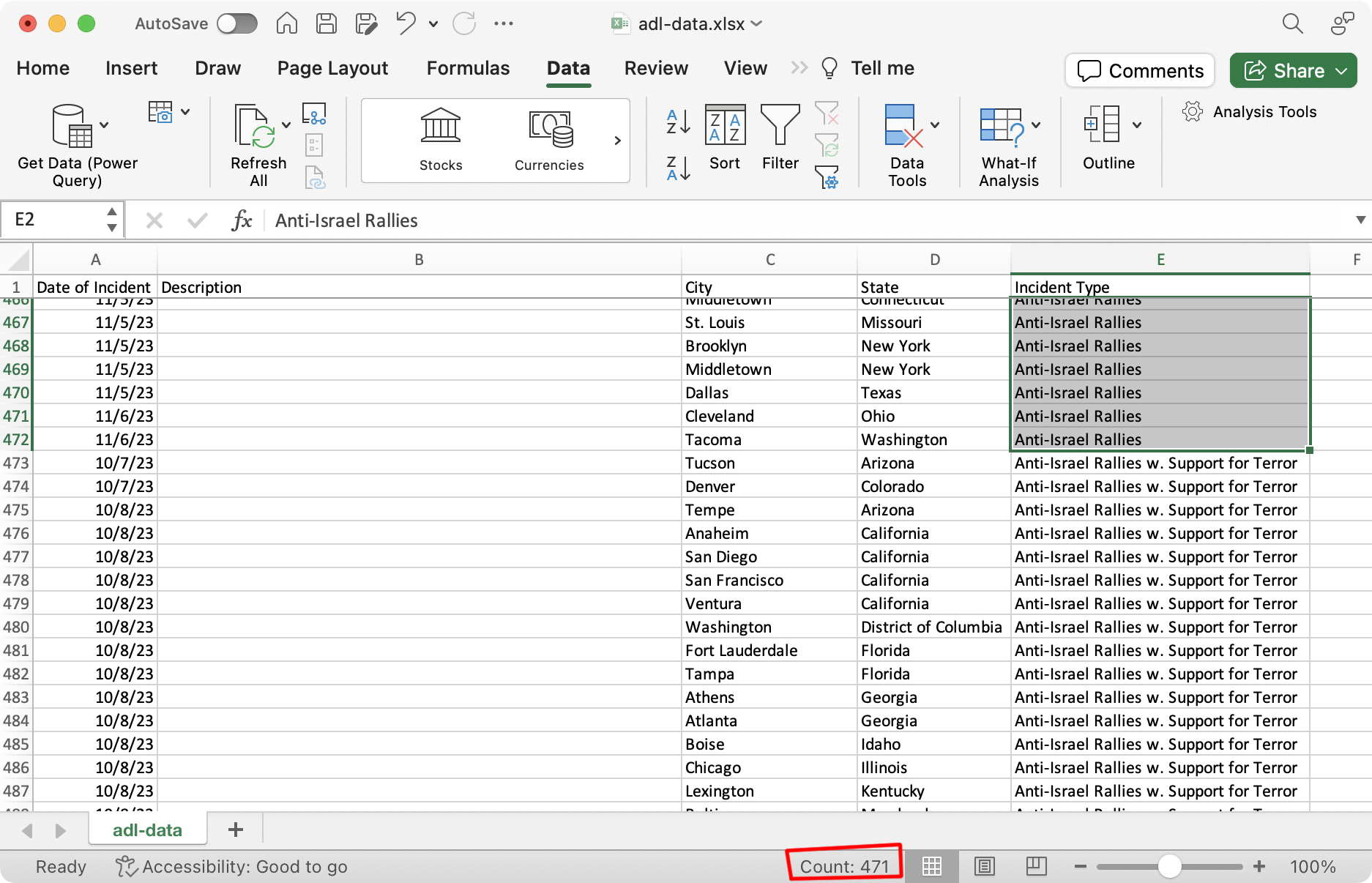

Now that it's sorted by Incident Type, I can that Anti-Israel Rallies is the type at the top.

I immediately see that the "Anti-Israel Rally" rows don't include descriptions -- they just have dates, cities, and incident types. Since the outbreak of the Israel-Gaza war, the anti-war movement has surged and some cities have seen multiple protests and direct actions calling for a ceasefire on the same day, organized by different groups. ADL's data doesn't make it clear which specific event each row is referring to. While I discovered dozens of Jewish-organized peace protests that match the dates and cities in ADL's data, it's impossible to know for sure if those are the specific "Anti-Israel Rallies" that ADL is referring to without asking them. An ADL spokesperson confirmed to me that most (but not all) of a set of 10 specific Jewish-organized protests I sent them were on their map -- some were not though, so ADL was referring to different events.

Counting rows in the spreadsheet

Here's how I determine how many rows of each type of incident this data contains.

I click on a column in the first "Anti-Israel Rally" row at the top of the spreadsheet, then scroll down until I see the last "Anti-Israel Rally" row, hold Shift, and click on a column in that row. This selects all of the "Anti-Israel Rally" rows. At the bottom of the Window in Excel, I can see that I have selected 471 rows.

Repeating these steps with the other incident types, I found that this data includes the following number of rows for each incident type:

- Anti-Israel Rally: 471

- Anti-Israel Rally w. Support for Terror: 171

- Assault: 41

- Harassment: 576

- Vandalism: 243

However, this data isn't quite accurate yet because I haven't taken account of the obvious duplicates.

Looking into the duplicates

Looking back at the Assault, Harassment, and Vandalism data, it's clear that there are duplicates. For example, there are two rows that have these exact same values:

- Date of Incident: 10/12/23

- Description:

A student posted a photo of another student performing a Nazi salute with the caption "These Jewish kids better watch themselves on Halloween" on a Snapchat story, which was visible to Jewish classmates.

- City: Everett

- State: Washington

- Incident Type: Harassment

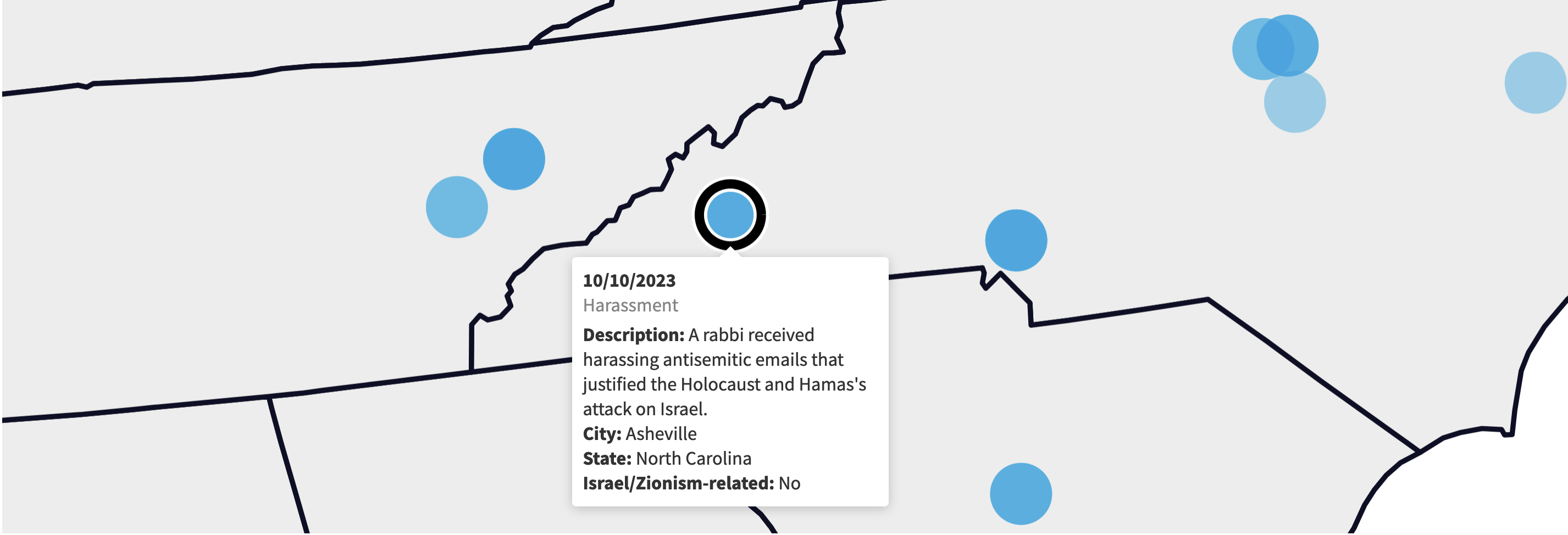

Since these two rows are exactly the same, they are obviously duplicates. However, there are rows where the descriptions don't match exactly, but are so similar that they appear to be duplicates anyway -- it looks like maybe the description was updated to fix a typo, and the old data point remained after the fix. For example, there are two rows that both document Harassment that occurred October 10 in Asheville, NC, but one row has this description:

A rabbi received harassing antisemitic emails that defended the Holocaust and Hamas's attack on Israel.

And another has this description:

A rabbi received harassing antisemitic emails that justified the Holocaust and Hamas's attack on Israel.

Even though one says "defended" and the other says "justified", I think that these rows are duplicates of the same incident, and when someone at ADL updated the description it kept the original row in the dataset.

Note that I don't think this means ADL is miscounting the antisemitic incident data that it's reporting. Everything I've seen shows that ADL does not appear to be counting duplicates in its statistics, they just happen to appear on the map for some reason.

If you load ADL's Stand With Israel map, zoom in on Asheville, NC, and toggle off all of the categories except Harassment, you'll see a blue dot over Asheville. When you mouse-over it, it shows this incident, with the word "justified" instead of "defended".

I then opened map.html in VS Code and searched for this incident in the HTML. I found that the enormous _Flourish_data line includes this piece of data (which I've formatted using VS Code, so that you can actually read it):

{ "color": "Harassment", "lat": 35.60271, "lon": -82.5673, "metadata": [ "10/10/2023", "A rabbi received harassing antisemitic emails that justified the Holocaust and Hamas's attack on Israel.", "Asheville", "North Carolina", "Harassment", "No" ], "name": "10/10/2023", "value": [] },

I deleted that from _Flourish_data, saved map.html, and then opened map.html in my browser so I could view the modified version of this HTML file locally. Again, I zoomed into Asheville and toggled off all the categories except Harassment. This time, the circle at Asheville is a slightly lighter shade of blue, and when I mouse-over it, it shows the other duplicate incident, with the word "defended" instead of "justified":

By deleting bits of data from map.html and then refreshing the page to view the map, I discovered a few things:

- All of the points in this data are in fact getting mapped, even the duplicates.

- The darker the circle on the map, the more points are drawn over each other -- this may be because of duplicates, or because of multiple incidents (perhaps on different dates) in the same city.

- If multiple points are drawn in same city, ADL's interface only allows you to look at the top-most point that was drawn -- the other incidents are technically drawn on the map, but you can't mouse-over them because the top point is in the way. You can't see them, but they're there.

Now that I have an understanding of how the duplicates in this dataset work, I need to de-dupe it.

De-duplicating the data

To de-dupe the data, I wrote some more Python code, this time with the help of AI -- specifically, GitHub Copilot (code-writing AI that you can integrate into VS Code) and ChatGPT. I could have done it without AI, but doing it with AI saved a lot of time.

My Python script so far loads map.html, extracts the data, and saves it as adl-data.csv. Every row of data in the spreadsheet is saved in a variable called rows. To de-dupe the data, I want to remove all of the duplicates from the rows list and then save that into a new, de-duped version of the CSV.

I decided to start with the easy ones and de-duplicate exact matches. Since I have GitHub Copilot set up and logged into my VS Code, I just wrote a comment saying something like:

# This function takes in a list of dictionaries, and returns a de-duplicated list, # where a duplicate is an item where all of the keys in its dictionary are exactly # the same as another item.

And, lo and behold, GitHub Copilot auto-suggested the following function for me:

def dedupe_exact_matches(items, key): seen = set() result = [] for item in items: val = tuple(item[k] for k in key) if val not in seen: seen.add(val) result.append(item) return result

I added this function to my script, and then after saving adl-data.csv, I added the following code:

# Dedupe rows = dedupe_exact_matches(rows, headers) print(f"Number of rows after de-duping exact matches: {len(rows)}") # Save the de-duped CSV with open("adl-data-deduped.csv", "w") as f: writer = csv.DictWriter(f, fieldnames=headers) writer.writeheader() for row in rows: writer.writerow(row)

When I run my script, here's the output:

% python3 extract-incidents.py

Number of rows: 1502

Number of rows after de-duping exact matches: 1263

I can see that 239 rows, all exact duplicates, were deleted. The script saves the file adl-data-deduped.csv. When I open it, I can see that many of the duplicates are gone, however there are still duplicates that aren't exact matches.

For example, after opening adl-data-deduped.csv in Excel, I can see that there are duplicate rows from an incident in Clifton, New Jersey on October 7. One of them has the description:

An individual shouted ?Long live Hamas? at a synagogue during Simchat Torah celebrations.

The other has the description:

An individual shouted "Long live Hamas" at a synagogue during Simchat Torah celebrations.

The first one used question marks instead of quotes, while the second one used quotes -- this looks like another example of someone correcting a typo in the description of an incident, but instead just adding a second incident.

At this point, I could have chosen to manually de-dupe the rest of the spreadsheet and leave at that. And, at first, this is exactly what I did: In Excel, I sorted the spreadsheet so that potential duplicates would be next to each other, and then looked through each of the 1,263 rows trying to find duplicates, and deleting one of them when I found them.

But since this reporting took me a little over a week, and since ADL was constantly updating their map, and every day activists took to the streets in dozens of new Palestinian solidarity protests (or "anti-Israel rallies," in ADL's language), I realized that I'd want to reload the map and get later versions of the dataset, and I didn't want to have manually de-dupe the spreadsheet again each time I did this.

I figured it must be possible to measure a numeric "distance" between two descriptions. If the distance was small enough (if the descriptions were almost the same), then I could consider them duplicates. If the distance was large (if they're two totally different sentences), I could conclude that they're not duplicates. I wasn't sure the simple way to do this in Python though, so I asked ChatGPT. I have a ChatGPT Plus account, giving me account to GPT-4, which is considerably better at this sort of thing than the free GPT-3.5.

I asked GPT-4 the following query, using real examples of duplicates as well as non-duplicates from the ADL dataset:

Write a Python function for me called

is_duplicate(). This function should take two dictionaries, likerow1androw2, and determine if they are duplicate rows. It should return True if they're likely duplicates and False if they're not.First, it should compare all of the fields in the two rows, and if any of them other than

Descriptionare different, it should return False, as these are not duplicates.Then, it should measure the "distance" between the descriptions of these two rows (

row1["Description"] and row2["Description"]). If the descriptions are very similar, it should consider them duplicates. If the descriptions are not similar, it should consider them not duplicates.Here are descriptions that are similar enough to be duplicates:

- An individual shouted "Long live Hamas" at a synagogue during Simchat Torah celebrations.

- An individual shouted ?Long live Hamas? at a synagogue during Simchat Torah celebrations.

Here's another example of duplicates:

- After noticing two visibly Jewish people speaking Hebrew, an individual followed the victims and screamed "Allahu Akbar."

- After noticing two visibly Jewish people speaking Hebrew, an individual followed the victims and screamed "Allahu Akbar" at them.

And here's another example of duplicates:

- During an anti-Israel rally, protestors made anti-Zionist comments such as "Zionism has got to go," praised violence against Israeli civilians and displayed antisemitic symbols including a swastika and the flag of Hamas.

- During an anti-Israel rally, protestors made anti-Zionist comments such as "Zionism has got to go," praised violence against Israeli civilians and displayed antisemitic symbols, including a swastika.

Here's an example of descriptions that are NOT duplicates:

- Swastika graffiti was left on the garage door of a home.

- Swastikas were spray-painted on a playground.

Here's another example of descriptions that are NOT duplicates:

- An individual wearing a Nazi uniform entered a restaurant.

- Extremist individuals distributed antisemitic propaganda the Fort Worth Botanic Garden.

Here's the code that GPT-4 gave me:

import difflib def is_duplicate(row1, row2): # Check if any field other than 'Description' is different for key in row1: if key != 'Description' and row1[key] != row2[key]: return False # Compare descriptions using a simple similarity measure similarity = difflib.SequenceMatcher(None, row1['Description'], row2['Description']).ratio() # Define a threshold for similarity (e.g., 0.8) threshold = 0.8 return similarity >= threshold # Example usage row1 = {'Field1': 'Value1', 'Field2': 'Value2', 'Description': 'An individual shouted "Long live Hamas" at a synagogue during Simchat Torah celebrations.'} row2 = {'Field1': 'Value1', 'Field2': 'Value2', 'Description': 'An individual shouted ?Long live Hamas? at a synagogue during Simchat Torah celebrations.'} print(is_duplicate(row1, row2)) # Should return True for similar descriptions

I then created a new script to test GPT-4's code and make sure that it works, by passing in these same examples of real duplicates and real not-duplicates to see if it actually works as intended.

All of the following data, by the way, are actual antisemitic incidents tracked by ADL (note the stark difference between these and peace rallies organized by Jews demanding a ceasefire in Gaza).

Here's my test script:

import difflib def is_duplicate(row1, row2): # Check if any field other than 'Description' is different for key in row1: if key != "Description" and row1[key] != row2[key]: return False # Compare descriptions using a simple similarity measure similarity = difflib.SequenceMatcher( None, row1["Description"], row2["Description"] ).ratio() # Define a threshold for similarity (e.g., 0.8) threshold = 0.8 return similarity >= threshold print("The follow are real duplicates and should return True:") print(is_duplicate( { "Date of Incident": "10/7/23", "Description": 'An individual shouted "Long live Hamas" at a synagogue during Simchat Torah celebrations.', "City": "Clifton", "State": "New Jersey", "Incident Type": "Harassment", }, { "Date of Incident": "10/7/23", "Description": "An individual shouted ?Long live Hamas? at a synagogue during Simchat Torah celebrations.", "City": "Clifton", "State": "New Jersey", "Incident Type": "Harassment", }, )) print(is_duplicate( { "Date of Incident": "10/7/23", "Description": 'After noticing two visibly Jewish people speaking Hebrew, an individual followed the victims and screamed "Allahu Akbar."', "City": "Brooklyn", "State": "New York", "Incident Type": "Harassment", }, { "Date of Incident": "10/7/23", "Description": 'After noticing two visibly Jewish people speaking Hebrew, an individual followed the victims and screamed "Allahu Akbar" at them.', "City": "Brooklyn", "State": "New York", "Incident Type": "Harassment", }, )) print(is_duplicate( { "Date of Incident": "10/8/23", "Description": 'During an anti-Israel rally, protestors made anti-Zionist comments such as "Zionism has got to go," praised violence against Israeli civilians and displayed antisemitic symbols including a swastika and the flag of Hamas.', "City": "Manhattan", "State": "New York", "Incident Type": "Harassment", }, { "Date of Incident": "10/8/23", "Description": 'During an anti-Israel rally, protestors made anti-Zionist comments such as "Zionism has got to go," praised violence against Israeli civilians and displayed antisemitic symbols, including a swastika.', "City": "Manhattan", "State": "New York", "Incident Type": "Harassment", }, )) print("The follow are not duplicates and should return False:") print(is_duplicate( { "Date of Incident": "10/8/23", "Description": "Swastika graffiti was left on the garage door of a home.", "City": "St. Louis", "State": "Missouri", "Incident Type": "Vandalism", }, { "Date of Incident": "10/8/23", "Description": "Swastikas were spray-painted on a playground.", "City": "St. Louis", "State": "Missouri", "Incident Type": "Vandalism", }, )) print(is_duplicate( { "Date of Incident": "10/8/23", "Description": "An individual wearing a Nazi uniform entered a restaurant.", "City": "Forth Worth", "State": "Texas", "Incident Type": "Harassment", }, { "Date of Incident": "10/8/23", "Description": "Extremist individuals distributed antisemitic propaganda the Fort Worth Botanic Garden.", "City": "Forth Worth", "State": "Texas", "Incident Type": "Harassment", }, ))

And here's what it looks like when I run this script:

% python3 gpt4-test.py

The follow are real duplicates and should return True:

True

True

True

The follow are not duplicates and should return False:

False

False

The is_duplicate() function that GPT-4 wrote me appears to work. So I added it into my extract-incidents.py script.

Following the code that de-dupes exact matches, I wrote the following comment:

# Some of the rows are exact matches except they have different "Description" values. # Use the is_duplicate function to dedupe these, keeping the row with the higher index.

GitHub Copilot auto-suggested the following code to do the rest of the de-duping:

for i, row1 in enumerate(rows): for j, row2 in enumerate(rows): if i < j: if is_duplicate(row1, row2): rows.pop(j)

In all, here is my final extract-incidents.py file:

import json import csv import difflib def is_duplicate(row1, row2): # Check if any field other than 'Description' is different for key in row1: if key != "Description" and row1[key] != row2[key]: return False # Compare descriptions using a simple similarity measure similarity = difflib.SequenceMatcher( None, row1["Description"], row2["Description"] ).ratio() # Define a threshold for similarity (e.g., 0.8) threshold = 0.8 return similarity >= threshold def dedupe_exact_matches(items, key): seen = set() result = [] for item in items: val = tuple(item[k] for k in key) if val not in seen: seen.add(val) result.append(item) return result def main(): # Load html from file with open("map.html") as f: html = f.read() # Extract data from HTML for line in html.split("\n"): if "_Flourish_data = " in line: data_json = line.replace("_Flourish_data = ", "") data_json = data_json.strip()[:-1] # strip trailing comma data = json.loads(data_json) # Save data points as CSV rows = [] headers = [ "Date of Incident", "Description", "City", "State", "Incident Type", ] with open("adl-data.csv", "w") as f: writer = csv.DictWriter(f, fieldnames=headers) writer.writeheader() for point in data["points"]: row = { "Date of Incident": point["metadata"][0], "Description": point["metadata"][1], "City": point["metadata"][2], "State": point["metadata"][3], "Incident Type": point["metadata"][4], } rows.append(row) writer.writerow(row) print(f"Number of rows: {len(rows)}") # Dedupe rows = dedupe_exact_matches(rows, headers) print(f"Number of rows after de-duping exact matches: {len(rows)}") # Some of the rows are exact matches except they have different "Description" values. # Use the is_duplicate function to dedupe these, keeping the row with the higher index. for i, row1 in enumerate(rows): for j, row2 in enumerate(rows): if i < j: if is_duplicate(row1, row2): rows.pop(j) print(f"Number of rows after de-duping similar descriptions: {len(rows)}") # Save the de-duped CSV with open("adl-data-deduped.csv", "w") as f: writer = csv.DictWriter(f, fieldnames=headers) writer.writeheader() for row in rows: writer.writerow(row) if __name__ == "__main__": main()

And here's what it looks like when I run it:

% python3 extract-incidents.py

Number of rows: 1502

Number of rows after de-duping exact matches: 1263

Number of rows after de-duping similar descriptions: 1226

The final adl-data-deduped.csv spreadsheet contains 1,226 rows of data.

Note that this is more data than from my article in The Intercept -- when I pulled this data on November 9 for that article, the spreadsheet had 1,163 rows of data. In the last 4 days, ADL has added 63 rows to their public dataset -- but, due to their conflation of antisemitism and anti-Zionist peace activism, it's hard to immediately know if this new data is the result of neo-Nazis, Jewish social justice activists, or something else.

Counting rows in the spreadsheet again

Now that I have a de-duped version of the spreadsheet, I have counted the rows one more time. Here's what I found:

- Anti-Israel Rally: 448 (36.5%)

- Anti-Israel Rally w. Support for Terror: 170 (13.9%)

- Assault: 30 (2.4%)

- Harassment: 406 (33.1%)

- Vandalism: 172 (14.0%)

If you're interested in what ADL means when they classify "anti-Israel rallies" as "with support for terror," go read my original reporting. ADL told me their criteria.

The rest of the journalism

Extracting this data from ADL's online map -- what I've described in this blog post -- was just half of the work. The other half involved a lot of traditional investigative journalism work, including:

- Contacting ADL and asking them questions about their "antisemitic incidents and anti-Israel rallies" dataset, including specifically about their choice to include Jewish peace rallies.

- Contacting organizers from the progressive Jewish groups IfNotNow and Jewish Voice for Peace to let them know that they're lumped in with hate groups by the ADL, and see what they have to say about it. Also, asking them for help identifying protests and direct actions around the US that were organized exclusively by Jews, or that were co-sponsored by Jews along with other groups.

- Making a comprehensive list of social media accounts, mostly on Facebook, Instagram, and X, run by national and regional chapters of IfNotNow and Jewish Voice for Peace -- my list ended up with containing 71 different accounts.

- Scouring every single post, on every single social media account, since October 7 looking for "anti-Israel rallies" in the ADL dataset that were organized by Jews -- I spent at least a solid 8 hours doing this.

I kept track of all of this in a spreadsheet, marking which rows were confirmed organized by Jews, along with links to social media posts showing the event flyers with Jewish orgs listed as co-sponsors, and other links to media from the Jewish-organized protests. Once I had collected this data, along with relevant quotes from ADL and Jewish activists, I sat down and wrote my article.

Hacks, Leaks, and Revelations

This particular ADL dataset is completely public. Everyone has access to it -- that is, everyone who has the right skills -- and it wasn't a hacked or leaked dataset. But the data was buried inside a web page, inaccessible to most people. The skills it takes to analyze this are exactly the same as the skills you need to analyze hacked and leaked datasets.

If you want to get good at this sort of work, check out my book Hacks, Leaks, and Revelations: The Art of Analyzing Hacked and Leaked Data. I wrote this book for journalists, researchers, hacktivists, and anyone else who wants to learn the technologies and coding skills required to investigate hacked, leaked, and entirely public datasets like this one. While it gets pretty technical, it's written for beginners with no prior knowledge required.

Subscribe to feed

Subscribe to feed